How to Prevent Unwanted Query Strings from Being Crawled in WordPress (Without Redirects)

If you’ve noticed lots of strange URLs showing up in your Google Search Console reports (URLs with tracking parameters like ?_gl=… or ?_bd_prev_page=…), you’re not alone. These URLs often cause SEO headaches, such as duplicate content issues and wasted crawl budget.

In this comprehensive guide, I’ll explain why these URLs appear, why they’re a problem, and how you can stop search engines from indexing them on your WordPress site running LiteSpeed, all without using redirects.

Why Are Tracking Query Strings a Problem?

Tracking parameters like _gl and _bd_prev_page are typically added by marketing or analytics tools. While these help you track traffic sources and user behavior, they create multiple URL versions of the same content, leading to:

- Duplicate content: Search engines see the same page multiple times under different URLs.

- Crawl budget waste: Googlebot spends time crawling duplicate URLs instead of your important pages.

- Diluted SEO value: Backlinks and ranking signals may split across URL variations.

Controlling how these URLs are crawled is critical for maintaining your site’s SEO health.
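To see how several URL variants map back to one page, here is a minimal sketch that strips tracking parameters from a URL. The function name and the default parameter list are assumptions for illustration. The helper is deliberately simple: it ignores ports and fragments and re-encodes the remaining query string.

```php
<?php
// Hypothetical helper: drop the given tracking parameters from a URL.
// Simplified: ports and #fragments are not preserved.
function strip_tracking_params(string $url, array $params = ['_gl', '_bd_prev_page']): string
{
    $parts = parse_url($url);
    parse_str($parts['query'] ?? '', $query);

    // Remove every query key that appears in the tracking list.
    $query = array_diff_key($query, array_flip($params));

    $clean = ($parts['scheme'] ?? 'https') . '://' . ($parts['host'] ?? '') . ($parts['path'] ?? '/');
    return $query ? $clean . '?' . http_build_query($query) : $clean;
}

$variants = [
    'https://example.com/detail.php',
    'https://example.com/detail.php?_gl=abc123',
    'https://example.com/detail.php?_bd_prev_page=home&_gl=xyz',
];

// All three variants collapse to the same clean URL.
print_r(array_unique(array_map('strip_tracking_params', $variants)));
```

Running something like this over a Search Console export is a quick way to estimate how many reported URLs are really the same page.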

Why Robots.txt Alone Isn’t Enough

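Robots.txt controls crawling, not indexing, and its rules are blunter than they look. A Disallow rule is matched as a prefix against the URL path plus query string, so disallowing /detail.php blocks both of these:

    https://example.com/detail.php
    https://example.com/detail.php?_gl=abc123

That takes the clean page out of the crawl along with the tracked one. Google also supports wildcard rules such as Disallow: /*_gl= that target only the parameterized URLs, but a blocked URL can still end up indexed if other pages link to it, and Googlebot never sees a noindex tag on a page it is not allowed to fetch. To keep these URLs out of the index, you have to let them be crawled and explicitly tell Google not to index them.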
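Google documents Disallow values as prefix patterns matched against the path and query string, with * as a wildcard and $ as an end anchor. The sketch below mimics that matching so you can check what a rule would cover before deploying it. The function name is hypothetical, and the code handles a single pattern only: it ignores Allow rules, user-agent groups, and rule precedence.

```php
<?php
// Hypothetical helper: does one robots.txt Disallow pattern cover a URL?
// Simplified: no Allow rules, no user-agent groups, no longest-match precedence.
function robots_pattern_matches(string $pattern, string $url): bool
{
    $parts  = parse_url($url);
    $target = ($parts['path'] ?? '/') . (isset($parts['query']) ? '?' . $parts['query'] : '');

    // A trailing "$" anchors the pattern to the end of the URL.
    $anchored = substr($pattern, -1) === '$';
    if ($anchored) {
        $pattern = substr($pattern, 0, -1);
    }

    // Escape regex metacharacters, then turn each "*" into ".*".
    $regex = str_replace('\*', '.*', preg_quote($pattern, '#'));

    return preg_match('#^' . $regex . ($anchored ? '$' : '') . '#', $target) === 1;
}

var_dump(robots_pattern_matches('/detail.php', 'https://example.com/detail.php'));            // bool(true)
var_dump(robots_pattern_matches('/detail.php', 'https://example.com/detail.php?_gl=abc123')); // bool(true)
var_dump(robots_pattern_matches('/*_gl=', 'https://example.com/detail.php?_gl=abc123'));      // bool(true)
var_dump(robots_pattern_matches('/*_gl=', 'https://example.com/detail.php'));                 // bool(false)
```

Even when a pattern matches, the rule only stops crawling. It does not remove the URL from the index.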

The Best Solution: Add Meta Robots Noindex Tag for URLs with Query Strings

How Does It Work?

You want Google to crawl your main URLs but not index versions with tracking parameters. The way to do this is by adding a meta robots tag like this on those pages:

    <meta name="robots" content="noindex, follow">

This tells search engines:

- Don’t index this page (to avoid duplicate content)

- Still follow links on this page (so link equity flows)

How to Implement in WordPress

Add the following snippet to your active theme’s functions.php file or a site-specific plugin:

    // Print a robots meta tag on any request that carries a tracking parameter.
    function add_noindex_for_tracking_urls() {
        if ( isset( $_GET['_gl'] ) || isset( $_GET['_bd_prev_page'] ) ) {
            echo '<meta name="robots" content="noindex, follow">' . "\n";
        }
    }
    add_action( 'wp_head', 'add_noindex_for_tracking_urls' );

This automatically inserts the meta tag on any page whose URL contains _gl or _bd_prev_page.
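If the list of parameters is likely to grow, one possible variant keeps them in a single array and moves the check into a small function that can be tested outside WordPress. The constant and function names below are assumptions for illustration, not part of WordPress. The hook is registered only when add_action exists, so the file also runs on its own.

```php
<?php
// Assumed list: extend it with whatever parameters show up in Search Console.
const TRACKING_PARAMS = ['_gl', '_bd_prev_page'];

// Returns true when any tracking parameter is present in the query array.
function has_tracking_param(array $query, array $params = TRACKING_PARAMS): bool
{
    return count(array_intersect(array_keys($query), $params)) > 0;
}

// Prints the robots meta tag for requests that carry a tracking parameter.
function print_noindex_for_tracking_urls(): void
{
    if (has_tracking_param($_GET)) {
        echo '<meta name="robots" content="noindex, follow">' . "\n";
    }
}

// Register the hook only when WordPress is loaded.
if (function_exists('add_action')) {
    add_action('wp_head', 'print_noindex_for_tracking_urls');
}
```

If an SEO plugin already prints its own robots meta tag, check the page source for duplicate or conflicting tags after adding this.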

Why Redirecting Too Many URLs Can Hurt Your Crawl Budget and SEO

It’s tempting to fix unwanted URLs by redirecting them to clean URLs (for example, redirecting https://example.com/detail.php?_gl=abc123 to https://example.com/detail.php).

But excessive or improper redirects can actually harm your SEO and crawl efficiency:

What Happens When You Redirect Too Many URLs?

- Crawl budget waste: Every redirect counts as an extra HTTP request. When Googlebot encounters a redirect, it has to crawl the original URL, get redirected, and then crawl the destination URL. This means double crawling effort for the same content.

- Delayed indexing: Redirect chains or loops slow down Googlebot, reducing the number of important pages it crawls and indexes in a given time. Your essential pages might get crawled less often or later.

- Possible ranking drops: Frequent or long redirect chains dilute page authority and user experience signals. Google may lower rankings if redirects are excessive or confusing.

Example Scenario

Imagine your site generates thousands of URLs with tracking parameters daily. If you set up redirects for all these URLs, Googlebot has to:

- Crawl the URL with tracking parameters.

- Get redirected to the clean URL.

- Crawl the clean URL.

This doubles (or more) the crawl requests needed just for one page, causing Google to spend precious crawl budget on redundant URLs instead of discovering new or updated content.
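The arithmetic behind that scenario can be sketched as follows. It rests on the assumption stated above, that every redirected URL leads to a separate fetch of its destination. The daily URL count is a made-up figure for illustration.

```php
<?php
// Requests Googlebot makes for $urls redirected URLs, assuming each redirect
// chain has $hops hops and the destination is then fetched once per URL.
function crawl_requests_with_redirects(int $urls, int $hops = 1): int
{
    return $urls * ($hops + 1);
}

$tracked = 5000; // hypothetical number of tracked URLs discovered in a day

echo crawl_requests_with_redirects($tracked), "\n";    // 10000 (single redirect)
echo crawl_requests_with_redirects($tracked, 2), "\n"; // 15000 (two-hop chain)
```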

Optimizing .htaccess and Robots.txt to Avoid Redirect Overuse

Instead of redirecting every URL with a tracking parameter, combine these approaches:

- Use meta robots noindex tags on URLs with tracking parameters (as described above).

- Use robots.txt to disallow crawling of known unnecessary URL paths.

- Keep .htaccess clean — avoid redirect rules targeting query strings unless absolutely necessary.

This way, Googlebot spends crawl budget more efficiently, focusing on important pages without being caught in redirects.

Optimizing Your .htaccess File for WordPress + LiteSpeed

Your .htaccess file manages how URLs are processed on your server. It should be clean and follow best practices, especially if you’re using LiteSpeed caching.

Here’s a streamlined example:

    # BEGIN LSCACHE
    # END LSCACHE
    # BEGIN NON_LSCACHE
    # END NON_LSCACHE

    # BEGIN WordPress
    <IfModule mod_rewrite.c>
    RewriteEngine On
    RewriteRule .* - [E=HTTP_AUTHORIZATION:%{HTTP:Authorization}]
    RewriteBase /
    RewriteRule ^index\.php$ - [L]
    RewriteCond %{REQUEST_FILENAME} !-f
    RewriteCond %{REQUEST_FILENAME} !-d
    RewriteRule . /index.php [L]
    </IfModule>
    # END WordPress

    # BEGIN LiteSpeed
    <IfModule Litespeed>
    SetEnv noabort 1
    </IfModule>
    # END LiteSpeed

Additional Best Practices

Use robots.txt to disallow entire URL paths you never want crawled:

    User-agent: *
    Disallow: /detail.php
    Disallow: /shopdetail/
    Disallow: /pcmypage

- Keep your SEO plugin (Rank Math, Yoast SEO) updated — some offer noindex controls for parameters.

- Regularly monitor Google Search Console for new unwanted URLs.

- Always backup functions.php and .htaccess before making changes.

Frequently Asked Questions (FAQs)

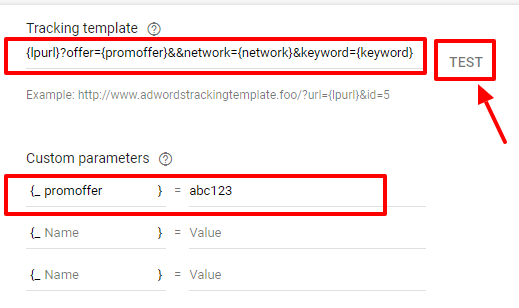

Q1: Can I block query strings directly in robots.txt?

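Partly. Google supports wildcard patterns in robots.txt, so a rule such as Disallow: /*_gl= does match URLs containing that parameter. It only blocks crawling, though: blocked URLs can still be indexed if other pages link to them, and Googlebot cannot see a noindex tag on a page it is not allowed to fetch. To keep parameterized URLs out of the index, use a meta robots tag instead. The URL Parameters tool in Google Search Console was retired in 2022 and is no longer an option.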

Q2: Will adding noindex affect my page rankings?

It should not hurt the pages you care about. The noindex tag applies only to the URL variants with tracking parameters, so your clean URLs stay indexed, and keeping the duplicates out of the index helps Google treat the main URL as the one to rank.

Q3: What if my SEO plugin doesn’t have an option to noindex parameters?

You can manually add the meta robots tag via your theme’s functions.php as shown above. This is a lightweight and reliable method.

Q4: Should I redirect URLs with tracking parameters to clean URLs?

Redirects can work but may break tracking or user sessions. The noindex method is safer and keeps URLs intact for analytics. Also, excessive redirects waste crawl budget and can delay indexing.

Q5: How do I check if Google respects the noindex tag?

Use the URL Inspection tool in Google Search Console. It shows if the page is indexed or marked as noindex.
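For a quick local check before Google recrawls the page, a rough sketch like the one below looks for a robots meta tag containing noindex in a page's HTML. The function name is hypothetical, and a regular expression is only an approximation of real HTML parsing, so treat the URL Inspection tool as the authoritative answer.

```php
<?php
// Hypothetical helper: true when the HTML has a robots meta tag whose
// content includes "noindex". A rough regex check, not a full HTML parser.
function html_has_noindex(string $html): bool
{
    if (!preg_match_all('/<meta\b[^>]*>/i', $html, $tags)) {
        return false;
    }
    foreach ($tags[0] as $tag) {
        $isRobots   = preg_match('/\bname\s*=\s*["\']?robots\b/i', $tag) === 1;
        $hasNoindex = preg_match('/\bcontent\s*=\s*["\'][^"\']*\bnoindex\b/i', $tag) === 1;
        if ($isRobots && $hasNoindex) {
            return true;
        }
    }
    return false;
}

var_dump(html_has_noindex('<head><meta name="robots" content="noindex, follow"></head>')); // bool(true)
var_dump(html_has_noindex('<head><meta name="robots" content="index, follow"></head>'));   // bool(false)
```

To test a live page, you could pass in the HTML fetched from a tracked URL, for example with file_get_contents where allow_url_fopen is enabled. That call is left out here so the sketch runs offline.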

Final Thoughts

Managing crawling and indexing of URLs with tracking query strings is crucial for good SEO hygiene. By combining a clean .htaccess setup, robots.txt disallow rules for paths you never want crawled, and meta robots noindex tags for query strings, you’ll keep your site optimized, avoid duplicate content issues, and improve crawl efficiency, all without redirects that waste your crawl budget.

If you want help implementing this or a full SEO audit, just reach out!